Searching for Moral Dumbfounding:

Identifying Measurable Indicators of Moral Dumbfounding

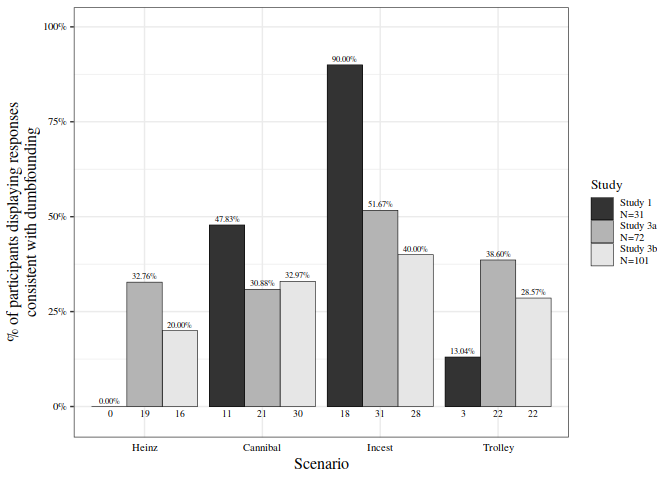

Moral dumbfounding is defined as maintaining a moral judgement without supporting reasons. The most cited demonstration of dumbfounding does not identify a specific measure of dumbfounding and has not been published in peer-reviewed form or directly replicated. Despite limited empirical examination, dumbfounding has been widely discussed in moral psychology. The present research examines the reliability with which dumbfounding can be elicited, and aims to identify measurable indicators of dumbfounding. Study 1 aimed to establish the effect that is reported in the literature. Participants read four scenarios and judged the actions described. An interviewer challenged participants’ stated reasons for judgements. Dumbfounding was evoked, as measured by two indicators: admissions of not having reasons (17%) and unsupported declarations (9%), with differences between scenarios. Study 2 measured dumbfounding as the selection of an unsupported declaration as part of a computerised task. We observed high rates of dumbfounding across all scenarios. Studies 3a (college sample) and 3b (MTurk sample), addressing limitations in Study 2, replaced the unsupported declaration with an admission of having no reason, and included open-ended responses that were coded for unsupported declarations. As predicted, lower rates of dumbfounding were observed (3a 20%; 3b 16%; or 3a 32%; 3b 24% including unsupported declarations in open-ended responses). Two measures provided evidence for dumbfounding across three studies; rates varied with task type (interview/computer task), and with the particular measure being employed (admissions of not having reasons/unsupported declarations). Possible cognitive processes underlying dumbfounding and limitations of methodologies used are discussed as a means to account for this variability.


Moral dumbfounding occurs when people stubbornly maintain a moral judgement even though they can provide no reason to support it (Haidt 2001; Haidt, Björklund, and Murphy 2000; Prinz 2005). It typically manifests as a state of confusion or puzzlement coupled with (a) an admission of not having reasons or (b) the use of unsupported declarations (“It’s just wrong!”) as justification for a judgement (Haidt, Björklund, and Murphy 2000; Haidt and Hersh 2001), particularly when people encounter taboo behaviours that do not result in any harm. The classic and most commonly cited example involves an act of consensual incest between a brother and sister with the use of contraception (Incest). Another example (Cannibal) involves an act of cannibalism with a body that is already dead and is due to be incinerated the next day (Haidt, Björklund, and Murphy 2000).1

Defining and Measuring Moral Dumbfounding

Definitions of moral dumbfounding vary within the moral psychology literature. It was originally defined as “the stubborn and puzzled maintenance of a judgment without supporting reasons” (Haidt, Björklund, and Murphy 2000, 2; see also, Haidt and Hersh 2001, 194; Haidt and Björklund 2008, 197). Some authors cite the original definition verbatim (e.g., Jacobson 2012; Royzman, Kim, and Leeman 2015); others include the maintenance of a moral judgement despite the absence of supporting reason, but omit any reference to stubbornness or puzzlement (e.g., Cushman, Young, and Hauser 2006; Dwyer 2009; Gray, Schein, and Ward 2014; Haidt 2007; Wielenberg 2014); and some refer to confidence in the judgement, but again, omit any reference to stubbornness or puzzlement (e.g., Cushman, Young, and Greene 2010; Hauser et al. 2007; Hauser, Young, and Cushman 2008; Pizarro and Bloom 2003; Sneddon 2007).

It is apparent from the literature that there is no single, agreed definition of moral dumbfounding. That said, an absence of reasons for, or an inability to justify or defend, a moral judgement is consistently identified across definitions. However, despite this apparent consistency, there remains considerable variation in the language used to describe this “failure to provide reasons for a moral judgement”. Indeed, the lack of definitional specificity has led to differing interpretations of moral dumbfounding. It also allows for the possibility of disagreement relating to the implications, both theoretical and practical, of moral dumbfounding.

According to the original definition, moral dumbfounding is “the stubborn and puzzled maintenance of a judgment without supporting reasons” (Haidt, Björklund, and Murphy 2000, 2). This definition contains four separate elements: (i) stubbornness; (ii) puzzlement; (iii) maintaining of the judgement; and (iv) the absence of supporting reasons. Of these individual elements, stubbornness and puzzlement, arguably, emerge as consequences of the combination of the maintenance of the judgement in the absence of supporting reasons. If a person maintains a judgement in the absence of reasons (and this absence of reasons has been pointed out to them) they will be perceived as stubborn; and, if a person becomes aware that they do not have reasons for their judgement, they may become puzzled.

Following this, and in line with the wider literature, elements (iii) and (iv), the maintenance of the judgement and the absence of supporting reasons, are identified as the essential elements of dumbfounding. This does not mean that stubbornness and puzzlement should be ignored entirely; accounting for them may be useful in differentiating between a failure to provide reasons and a refusal to provide reasons. However, viewing stubbornness and puzzlement as consequences of the maintenance of a judgement in the absence of supporting reasons indicates that they are subsequent to, and not a necessary part of, moral dumbfounding.

This view of dumbfounding includes the elements of the phenomenon that are mentioned most frequently within the wider literature. It is also consistent with the way dumbfounding is described in the original study by Haidt, Björklund, and Murphy (2000). They report interesting variation in a number of non-verbal behaviours that may be linked with stubbornness or puzzlement, but beyond these, they do not offer a specific indication of how stubbornness and puzzlement are operationalised. Furthermore, other than appearing in the introductory definition of dumbfounding in the abstract (Haidt, Björklund, and Murphy 2000, 2), the terms “stubborn” and “puzzled” do not appear again in the remainder of the paper, suggesting that they are not core elements of the phenomenon.

Haidt, Björklund, and Murphy (2000) report a range of responses that may illustrate a state of dumbfoundedness (admissions of not having reasons and unsupported declarations); however, they do not provide details of the numbers of participants they classified as dumbfounded, or of the specific responses that may be used to make such a classification. The numbers of participants who provided admissions of not having reasons are reported; however, it is unclear whether or not this may be taken as a specific measure of dumbfounding, or even if such a measure exists. This vagueness in the initial operationalisation of dumbfounding is reflected in the wider literature, whereby evidence of, or illustrations of, dumbfounding include unsupported declarations (Haidt 2001, 817; Prinz 2005, 101) and tautological reasons (“because it’s incest”; Mallon and Nichols 2011, 285). The current research aims to identify specific measurable responses that may be used as indicators of dumbfounding.

Drawing on the work of Haidt, Björklund, and Murphy (2000), and the wider literature, the absence of supporting reasons appears to present in two distinct ways. Firstly, and non-controversially, participants may become aware that they do not have reasons and acknowledge this (admissions of not having reasons). Secondly, participants may fail to provide reasons. Measuring this failure to provide reasons is more problematic; if a participant does not admit to not having reasons, they may attempt to disguise their failure to identify reasons. The use of unsupported declarations or tautological reasons as justifications for a judgement may be identified as a failure to provide reasons. Stating “it’s just wrong” or “because it’s wrong” does not answer the question “do you have a reason for your judgement?” (Mallon and Nichols 2011, 285).

The earliest evidence for moral dumbfounding emerged indirectly as a result of a study by Haidt, Koller, and Dias (1993). This was a cross-cultural study examining the variability of the moral judgements of participants depending on age, socio-economic status, and nationality (USA or Brazil). Participants were presented with a range of moral scenarios, some of which were offensive but harmless; for example, cutting up a national flag (Brazil or USA, matched to sample) and using it to clean the bathroom; a family eating their dog after it was killed by a car; and a brother and sister kissing each other on the mouth. When asked to justify their condemnation of certain actions, some participants (from both countries) used unsupported declarations as a reason; for example, “Because it’s wrong to eat your dog” or “Because you’re not supposed to cut up the flag” (Haidt, Koller, and Dias 1993, 632). This was not a direct study of moral dumbfounding; rather, it investigated differences in the way people reason about moral scenarios. The use of unsupported declarations in response to some moral scenarios was noted among a range of responses (Haidt, Koller, and Dias 1993).

A later study, by Haidt, Björklund, and Murphy (2000), directly investigated the phenomenon of moral dumbfounding. In their study two moral scenarios (Incest and Cannibal: see Appendix A) designed to elicit strong emotional reactions, but with no identifiable harmful consequences (emotional intuition scenarios), were contrasted against a traditional moral judgement scenario (Heinz) that involved balancing the interests of two people (reasoning scenario). They observed differences in responses between the two types of scenarios: participants were better at defending their judgement for the reasoning scenario than for the emotional intuition scenarios. It appeared that these emotional intuition scenarios could elicit dumbfounding as evidenced by significant increases in (a) admissions of having no reasons for a judgement, or (b) the use of unsupported declarations (“it’s just wrong”) as a justification for a judgement (Haidt, Björklund, and Murphy 2000, 12). Although interesting, that study (consisting of a final sample of thirty participants) has not been published in peer-reviewed form and has not been replicated.2

The following year, Haidt and Hersh (2001) investigated differences between conservatives and liberals, across a range of responses to moral issues, and found that conservatives produced more dumbfounded-type responses (e.g., stuttering, stating “I don’t know”, admitting they could not explain their answers; Haidt and Hersh 2001, 200) than liberals when discussing particular issues. Although this study did not investigate dumbfounding directly, the findings indicate that there may be individual differences that drive moral judgements which have not yet been fully investigated.

The phenomenon of moral dumbfounding has been widely discussed in the moral psychology literature (e.g., Cushman 2013; Cushman, Young, and Hauser 2006; Cushman, Young, and Greene 2010; Hauser et al. 2007; Prinz 2005; Royzman, Kim, and Leeman 2015), but there is limited available empirical information about the nature of moral dumbfounding and the reliability with which it can be elicited in everyday human behaviour. Some authors have argued that moral dumbfounding does not really exist (Gray, Schein, and Ward 2014; Jacoby 1983; Sneddon 2007; Wielenberg 2014; see also Royzman, Kim, and Leeman 2015).3 The studies described in the present paper aim to replicate the initial interview study of Haidt, Björklund, and Murphy (2000), and to explore practicable methods for testing the phenomenon, and its variability, in larger sample sizes. This will allow for more detailed study of the phenomenon. A deeper understanding of dumbfounding will inform the continuing development of theories of moral judgement, furthering our understanding of the interactions between intuitions and reasoned judgements in the way in which people make moral evaluations.

Moral dumbfounding is used as supporting evidence for a range of “intuitionist” theories of moral judgement (e.g., Cushman, Young, and Greene 2010; Haidt 2001; Prinz 2005). According to these intuitionist theories, our moral judgements are grounded in an emotional or intuitive automatic response rather than slow deliberate reasoning (Cameron, Payne, and Doris 2013; Crockett 2013; Cushman 2013; Cushman, Young, and Greene 2010; Greene 2008; Haidt 2001; Prinz 2005). Two of the most influential such theories of moral judgement have been Haidt’s social intuitionist model (Haidt 2001; Haidt and Björklund 2008) and Greene’s dual-process model (Greene 2008, 2013; Greene et al. 2001). Haidt (2001), in his social intuitionist model, likens the distinction between fast moral intuitions and slow moral reasoning to the distinction between fast and slow thinking that appears in dual systems theories of cognition (Chaiken 1980; Epstein 1994; Zajonc 1980; see also Chaiken and Trope 1999; Haidt 2001; Kahneman 2011). In introducing and defending this model, Haidt makes specific reference to one of the dumbfounding scenarios, and the findings from the unpublished manuscript relating to this dilemma (Haidt 2001; see also Haidt and Björklund 2008; Haidt and Hersh 2001). Greene draws heavily on Haidt’s work in defending his dual-process model of moral judgement (Greene 2008). In more recent years, Cushman (2013; Cushman, Young, and Greene 2010) and Crockett (2013), building on the work of Haidt and Greene, have continued the development of intuitionist/dual-process theories of moral judgement (Crockett 2013; Cushman 2013; Greene 2008; Haidt 2001).

The current research, following from Cushman (2013) and Crockett (2013), takes moral intuitions as “model-free” (Crockett 2013, 364; Cushman 2013, 284) or habitual responses, emerging through a long history of reinforcement learning. According to this approach, consistent with other research on implicit learning (Berry and Dienes 1993; Reber 1989; Sun, Slusarz, and Terry 2005; see also Barsalou 2003, 2008, 2009; Evans 2003), the learning of a moral norm, leading to the emergence of an associated moral intuition, can occur independently of the learning of the reasons for, or explicit rules surrounding, the norm. Attributing moral judgements to intuitions in this way also means that moral reasoning does not necessarily cause moral judgements; rather, at least in some circumstances, reasoning is likely to occur post-hoc.

However, the claim that reasons for intuitions are learned independently of the intuition does not necessarily imply that there are no reasons for a given intuition. This leads to two difficulties in demonstrating the separation between intuitions and reasons for the intuition. Firstly, in many circumstances, it is possible to trace the emergence of a given social or moral norm to particular reasons. Pizarro and Bloom (2003) defend the claim that moral intuitions may be rational, and informed by prior reasoning or deliberation. A related, more general claim is that deliberative (model-based) responses can, over time, become automatic or habitual (e.g., Barsalou 2003; Cushman 2013; Dreyfus and Dreyfus 1990). Secondly, in many cases, after an intuitive judgement is made, reasons that are consistent with the judgement may be generated through post-hoc rationalisation (e.g., Cushman, Young, and Hauser 2006). This means that, although there is a clear theoretical case for a separation between intuitions and reasons for these intuitions, demonstrating this separation is problematic.

Moral dumbfounding, however, is a phenomenon that may demonstrate this separation between an intuition and reasons for the intuition. In certain cases, people maintain an intuition even though they cannot provide reasons for it. It is this standing, as a rare demonstration of a crucial theoretical point, that makes moral dumbfounding so interesting. Moral dumbfounding, therefore, provides evidence in support of the claim that moral intuitions are habitual and “model-free” (Crockett 2013, 364; Cushman 2013, 284). Demonstrating this separation between intuitions and reasons for the intuitions also demonstrates a separation between intuitions and the reasoning process, providing evidence for the suggestion that moral judgements are not necessarily dependent upon moral reasoning and, by extension, providing implicit evidence that moral reasoning occurs post-hoc.

The existence of moral dumbfounding, therefore, is compelling evidence for intuitionist theories of moral judgement. These theories are supported by a large body of other empirical evidence; however, they are also either directly (e.g., Cushman, Young, and Greene 2010; Haidt 2001; Hauser, Young, and Cushman 2008; Prinz 2005) or indirectly (e.g., Crockett 2013; Cushman 2013; Greene 2008, 2013) grounded in the assumption that moral dumbfounding is a real phenomenon. The present research aims to test the validity of the claim that moral dumbfounding is a real phenomenon through an attempted replication of the widely cited unpublished study by Haidt, Björklund, and Murphy (2000). This will also test the strength of existing moral theories grounded in its existence. In addition to this, we aim to identify specific, measurable indicators of dumbfounding and develop practicable methods for eliciting and measuring dumbfounding in larger samples. These may be used to explore the phenomenon in greater depth, informing the further development of moral theory.

In recent years moral dumbfounding has been challenged by a number of authors (e.g., Gray, Schein, and Ward 2014; Jacobson 2012; Sneddon 2007; Wielenberg 2014), who argue, in line with rationalist theories of moral judgement (Narvaez 2005; Kohlberg 1971; Topolski et al. 2013), that moral judgements are grounded in reasons. Recent work by Royzman, Kim, and Leeman (2015), involving a series of studies focusing on the Incest dilemma, identified two reasons that may be guiding participants’ judgements. The reasons identified were: (a) potential harm, where participants believed that harm could arise as a result of the actions of the characters in the scenario despite the vignette stating that no harm arose; and (b) normativity, where citing a moral norm is seen as sufficient justification for making a judgement consistent with that norm. They found that, when participants who endorsed either of these reasons were excluded from analysis, there were only four participants (from a sample of fifty-three) who rated the behaviour as wrong without offering a reason. Following a subsequent interview, two of these participants changed their judgement, and one changed her response to the question relating to normative reasons. This left just one participant who maintained that the behaviour was wrong without valid reason and, in their view, could be truly identified as dumbfounded. Consequently, they argue that dumbfounding is not as prevalent a phenomenon as portrayed by Haidt, Björklund, and Murphy (2000; see Royzman, Kim, and Leeman 2015, 310). In identifying reasons that appear to be guiding people’s judgements, they claim to have found evidence for rationalist theories of moral judgement (Royzman, Kim, and Leeman 2015, 311) over intuitionist theories.
They argue that the dumbfounded behaviours observed by Haidt, Björklund, and Murphy (2000) can be attributed to social pressure that exists in an interview setting, whereby participants accept the counter-arguments offered by the interviewer, even if they disagree, in order to appear cooperative (Royzman, Kim, and Leeman 2015, 299).

Royzman, Kim, and Leeman (2015) successfully identified reasons (harm-based reasons; normative reasons) that may underlie moral judgements in the case of the Incest dilemma, showing that, in the vast majority of cases, participants who rate the behaviour as wrong also endorse these reasons if given the opportunity. It is not surprising that instances of moral dumbfounding, defined as maintaining a moral judgement without providing supporting reasons, can be dramatically reduced by providing participants with reasons for them to endorse (particularly in view of the extensive literature on confabulation, e.g., Evans and Wason 1976; Gazzaniga and LeDoux 2013; Johansson et al. 2005; Nisbett and Wilson 1977; Wilson and Bar-Anan 2008). If a participant endorses a reason that is consistent with their judgement, this does not necessarily mean that this reason contributed to the making of the judgement. Whether or not participants are able to articulate or volunteer these reasons, without external prompts, has not been the subject of careful empirical investigation. The degree to which people falsely attribute everyday judgements to reasons that are more accurately described as post-hoc rationalisations is well documented (Greene 2008; Johansson et al. 2005; Nisbett and Wilson 1977).

The inability of people to articulate principles that are consistent with, and therefore may arguably be guiding, moral judgements has been documented in a study by Cushman, Young, and Hauser (2006). They identified three distinct principles that appear to guide moral judgements: (a) harm caused by action is worse than harm caused by omission; (b) harm intended is worse than harm foreseen; (c) harm involving physical contact is worse than harm without physical contact. They conducted a series of studies in which participants’ judgements were largely consistent with these principles. Interestingly, however, when questioned afterwards, participants were only reliably able to articulate two of these principles, (a) and (c). Principle (b), while consistent with the judgements made, was not well articulated by participants. It appears that making judgements consistent with a principle does not imply that participants can articulate this principle. It is this inability to articulate principles or reasons for a moral judgement that is the hallmark of moral dumbfounding and is of key interest in the current research.

In response to the limited number of demonstrations of, and related uncertainty surrounding, moral dumbfounding, the primary aims of the current research are (a) to identify specific measurable indicators of moral dumbfounding; and (b) to use these measures to examine the reliability with which dumbfounded responding can be evoked. We conducted four studies, each of which is a modified replication attempt of the original moral dumbfounding study (Haidt, Björklund, and Murphy 2000). In these studies, dumbfounding is measured according to two sets of responses: (a) an admission of having no reasons for a judgement (a measure of self-reported dumbfounding) and (b) use of unsupported declarations (“it’s just wrong”) or tautological reasons (“because it’s incest”) as a justification for a judgement (measures of a failure to provide reasons). Study 1 was designed to replicate Haidt et al.’s (2000) initial study using the original methods (face-to-face interview). In Study 2 we piloted alternative methods (a computer-based task) in an attempt to evoke moral dumbfounding in a systematic way with a larger sample. In Studies 3a and 3b the materials that were piloted in Study 2 were refined and administered to larger samples in an attempt to systematically evoke dumbfounded responding.

Study 1: Interview

The primary aim of Study 1 was to replicate the original dumbfounding study (Haidt, Björklund, and Murphy 2000). Four moral judgement vignettes were used (Appendix A). Three of these vignettes (Heinz, Incest, and Cannibal) were taken from Haidt, Björklund, and Murphy (2000). A fourth vignette (Trolley) was adapted from Greene et al. (2001). Haidt, Björklund, and Murphy (2000) contrasted Heinz, a so-called reasoning scenario, against Cannibal and Incest, so-called intuition scenarios. Their study also included two tasks that did not have any moral content. For the purposes of consistency and balance, the non-moral tasks were omitted from the present study, and a second moral reasoning vignette was included in their stead, such that two reasoning vignettes (Heinz and Trolley) were contrasted against two intuition vignettes (Incest and Cannibal). We hypothesised that dumbfounding would be elicited and that rates of dumbfounded responding would vary depending on the content of the dilemma, with the intuition scenarios eliciting more dumbfounded responses than the reasoning scenarios. Two measures of dumbfounding were taken, reflecting the two distinct ways in which absence of reasons may present: admissions of not having reasons (self-reported dumbfounding), and the use of an unsupported declaration (“it’s just wrong”) as a justification for a judgement, with a failure to provide any alternative reason when the unsupported declaration was questioned (a failure to provide reasons). As in the original study (Haidt, Björklund, and Murphy 2000), various non-verbal measures were also recorded in an attempt to account for stubbornness and puzzlement.

Method

Participants and design

Study 1 was a frequency-based attempted replication. The aim was to identify whether dumbfounded responding could be evoked. All participants were presented with the same four moral vignettes. Results are primarily descriptive. Any further analysis tested for differences in responding depending on the vignette, or type of vignette, presented.

A sample of 31 participants (15 female, 16 male; Mage = 28.83, min = 19, max = 64, SD = 10.99) took part in this study. Participants were undergraduate students, postgraduate students, and alumni from Mary Immaculate College (MIC) and University of Limerick (UL). Participation was voluntary and participants were not reimbursed for their participation.

Procedure and materials

Four moral judgement vignettes were used (Appendix A). Three of the vignettes (Heinz, Incest, and Cannibal) were taken from Haidt, Björklund, and Murphy (2000). Incest was taken directly from the original study; however, Cannibal and Heinz were modified slightly following piloting.

The original version of Cannibal stated that people had “donated their body to science for research”; participants during piloting were able to argue that eating does not constitute “research”. In order to remove this as a possible argument, the modified version stated that bodies had been donated for “the general use of the researchers in the lab” and that the “bodies are normally cremated, however, severed cuts may be disposed of at the discretion of lab researchers”.

Similarly, piloting suggested that participants agreed with the actions of Heinz and condemned the actions of the druggist. The original wording of Heinz suggested that any discussion related to Heinz as opposed to the druggist meaning that, for Heinz, participants would typically be defending an approval of the character’s actions. However, for Incest and Cannibal participants generally condemn the actions of the character and as such are defending a judgement of “morally wrong”. In order to ensure that participants were consistently defending a judgement of “morally wrong” across all scenarios, Heinz was modified to include “The druggist had Heinz arrested and charged”. Any discussion on Heinz then related to the character whose behaviour participants thought was wrong.

In the original study by Haidt, Björklund, and Murphy (2000), Incest and Cannibal are presented as “intuition” stories, and contrasted against a single “reasoning” dilemma: Heinz. To allow a more balanced comparison, a bridge variant of the classic trolley dilemma (Trolley) was included as a second “reasoning” dilemma. In this vignette, participants judge the actions of Paul, who pushes a large man off a bridge to stop a trolley and save five lives. The inclusion of Trolley meant that there were two “reasoning” dilemmas to be contrasted with the two “intuition” stories.

Sample counter arguments were prepared for each scenario. To ensure that participants were only pushed to defend a judgement of “morally wrong” these counter arguments exclusively defended the potentially questionable behaviour of the characters. A list of prepared counter arguments can be seen in Appendix B. A post-discussion questionnaire, taken from Haidt, Björklund, and Murphy (2000) was administered after discussion of each scenario (Appendix C).

Two other measures were also taken for exploratory purposes. Firstly, in response to a possible link between meaning and morality (e.g., Bellin 2012; Schnell 2011), the Meaning in Life questionnaire (MLQ; Steger et al. 2008) was included. This ten-item scale is made up of two five-item subscales: presence (e.g., “I understand my life’s meaning”) and search (e.g., “I am looking for something that makes my life feel meaningful”). Responses were recorded using a seven-point Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree). Secondly, in line with Haidt’s (2007; see also Haidt and Hersh 2001) work describing a link between religious conservatism and moral views, it was hypothesised that incidences of dumbfounding may be moderated by individual differences in religiosity. As such, the seven-item CRSi7 scale, taken from the Centrality of Religiosity Scale (Huber and Huber 2012), was also included. Participants responded to questions relating to the frequency with which they engage in religious or spiritual activity (e.g., “How often do you think about religious issues?”). Responses were recorded using a five-point Likert scale ranging from 1 (never) to 5 (very often).

The interviews took place in a designated psychology lab in MIC and were recorded on a digital video recording device. Participants were presented with an information sheet and a consent form. The consent form required two signatures: firstly, participants consented to take part in the study (including consent to be video recorded); the second signature related to use of the video for any presentation of the research (with voice distorted and face pixelated). Only two participants opted not to sign the second part.

Participants read brief vignettes describing each scenario, and were subsequently interviewed regarding the protagonists. All four scenarios were discussed in a single interview session, with a brief pause between each discussion for the participant to complete a questionnaire about their judgements, and to read the next scenario. The conversation continued when they were happy to do so. Each of the four moral dilemmas, Heinz, Trolley, Cannibal, and Incest (Appendix A), was presented in this way and participants were asked to judge the behaviour of the characters in the dilemmas. The order of presenting the scenarios was randomised. Judgements made by participants were challenged by the experimenter (“Nobody was harmed, how can there be anything wrong?”; “Do you still think it was wrong? Why?”; “Why do you think it is wrong?”; “Have you got a reason for your judgement?”). The resulting discussion continued until participants could not articulate any further arguments. Participants filled in a brief questionnaire after discussing each dilemma. In this they were asked to rate, on a seven-point Likert scale, how right/wrong they thought the behaviour was; how confident they were in their judgement; how confused they were; how irritated they were; how much their judgement had changed; how much their judgement was based on reason; and how much their judgement was based on “gut” feeling. Participants completed a longer questionnaire at the end of the interview. This contained the MLQ (Steger et al. 2008), the Centrality of Religiosity Scale (Huber and Huber 2012), and some questions relating to demographics. The entire study lasted approximately 20 to 25 minutes. The videos were analysed using BORIS (Behavioural Observation Research Interactive Software; Friard and Gamba 2015). All statistical analysis was conducted using R (3.4.0, R Core Team 2017b)4; SPSS (IBM Corp 2015) was also used.

Results and Discussion

The videos of the interviews were analysed and participants were identified as dumbfounded if they ( a ) admitted to not having reasons for their judgements; or ( b ) resorted to using unsupported declarations (“It’s just wrong!”) as justification for their judgements, and subsequently failed to provide reasons when questioned further. Table 1 shows the initial and revised ratings of the behaviours for each scenario.

Table 1 Ratings of each scenario for each study

| Study | Judgement | Heinz (N) | Heinz (%) | Cannibal (N) | Cannibal (%) | Incest (N) | Incest (%) | Trolley (N) | Trolley (%) |
|---|---|---|---|---|---|---|---|---|---|
| Study 1 | Initial: Wrong | 27 | 87.1% | 25 | 80.65% | 26 | 83.87% | 23 | 74.19% |
| | Initial: Neutral | 0 | 0% | 0 | 0% | 0 | 0% | 0 | 0% |
| | Initial: OK | 4 | 12.9% | 6 | 19.35% | 5 | 16.13% | 8 | 25.81% |
| | Revised: Wrong | 26 | 83.87% | 23 | 74.19% | 20 | 64.52% | 22 | 70.97% |
| | Revised: Neutral | 0 | 0% | 0 | 0% | 0 | 0% | 1 | 3.23% |
| | Revised: OK | 5 | 16.13% | 8 | 25.81% | 11 | 35.48% | 8 | 25.81% |
| Study 2 | Initial: Wrong | 53 | 73.61% | 68 | 94.44% | 63 | 87.5% | 50 | 69.44% |
| | Initial: Neutral | 9 | 12.5% | 3 | 4.17% | 3 | 4.17% | 6 | 8.33% |
| | Initial: OK | 10 | 13.89% | 1 | 1.39% | 6 | 8.33% | 16 | 22.22% |
| | Revised: Wrong | 51 | 70.83% | 67 | 93.06% | 66 | 91.67% | 48 | 66.67% |
| | Revised: Neutral | 7 | 9.72% | 3 | 4.17% | 3 | 4.17% | 9 | 12.5% |
| | Revised: OK | 14 | 19.44% | 2 | 2.78% | 3 | 4.17% | 15 | 20.83% |
| Study 3a | Initial: Wrong | 54 | 75% | 67 | 93.06% | 61 | 84.72% | 48 | 66.67% |
| | Initial: Neutral | 6 | 8.33% | 3 | 4.17% | 7 | 9.72% | 10 | 13.89% |
| | Initial: OK | 12 | 16.67% | 2 | 2.78% | 4 | 5.56% | 14 | 19.44% |
| | Revised: Wrong | 53 | 73.61% | 67 | 93.06% | 57 | 79.17% | 43 | 59.72% |
| | Revised: Neutral | 11 | 15.28% | 4 | 5.56% | 12 | 16.67% | 15 | 20.83% |
| | Revised: OK | 8 | 11.11% | 1 | 1.39% | 3 | 4.17% | 14 | 19.44% |
| Study 3b | Initial: Wrong | 81 | 80.2% | 85 | 84.16% | 71 | 70.3% | 66 | 65.35% |
| | Initial: Neutral | 9 | 8.91% | 13 | 12.87% | 20 | 19.8% | 14 | 13.86% |
| | Initial: OK | 11 | 10.89% | 3 | 2.97% | 10 | 9.9% | 21 | 20.79% |
| | Revised: Wrong | 87 | 86.14% | 82 | 81.19% | 73 | 72.28% | 59 | 58.42% |
| | Revised: Neutral | 10 | 9.9% | 15 | 14.85% | 19 | 18.81% | 17 | 16.83% |
| | Revised: OK | 4 | 3.96% | 4 | 3.96% | 9 | 8.91% | 25 | 24.75% |

Twenty two of the 31 participants (70.97%) produced a dumbfounded response (admission of having no reasons; or the use of an unsupported declaration as a justification for a judgement, with a failure to provide any alternative reason when the unsupported declaration was questioned) at least once. Examples of such responses included “It just seems wrong and I cannot explain why, I don’t know”, “because I just think it’s wrong, oh God, I don’t know why, it’s just [pause] wrong”. Table 2 shows the number, and percentage, of participants who displayed dumbfounded responses and non-dumbfounded responses for each dilemma. The rates of each type of dumbfounded response are also displayed. Figure 1 shows the percentage of participants displaying dumbfounded responses for each dilemma. Table 3 shows the responses to the questionnaires presented between dilemmas.

Table 2 Observed frequency and percentage of each of the responses: dumbfounded, nothing wrong, and reasons provided

| Study | Response | Heinz (N) | Heinz (%) | Cannibal (N) | Cannibal (%) | Incest (N) | Incest (%) | Trolley (N) | Trolley (%) |
|---|---|---|---|---|---|---|---|---|---|
| Study 1 | Nothing wrong | 6 | 19.35% | 8 | 25.81% | 11 | 35.48% | 8 | 25.81% |
| | Dumbfounded | 0 | 0% | 11 | 35.48% | 18 | 58.06% | 3 | 9.68% |
| | (admissions) | 0 | 0% | 8 | 25.81% | 10 | 32.26% | 3 | 9.68% |
| | (declarations) | 0 | 0% | 3 | 9.68% | 8 | 25.81% | 0 | 0% |
| | Reasons | 25 | 80.65% | 12 | 38.71% | 2 | 6.45% | 20 | 64.52% |
| Study 2 | Nothing wrong | 8 | 11.11% | 4 | 5.56% | 2 | 2.78% | 10 | 13.89% |
| | Dumbfounded | 45 | 62.5% | 46 | 63.89% | 54 | 75% | 45 | 62.5% |
| | Reasons | 19 | 26.39% | 22 | 30.56% | 16 | 22.22% | 17 | 23.61% |
| Study 3a (critical slide) | Nothing wrong | 14 | 19.44% | 4 | 5.56% | 12 | 16.67% | 15 | 20.83% |
| | Dumbfounded | 13 | 18.06% | 14 | 19.44% | 18 | 25% | 14 | 19.44% |
| | Reasons | 45 | 62.5% | 54 | 75% | 42 | 58.33% | 43 | 59.72% |
| Study 3a (coded) | Nothing wrong | 14 | 19.44% | 4 | 5.56% | 12 | 16.67% | 15 | 20.83% |
| | Dumbfounded | 19 | 26.39% | 21 | 29.17% | 31 | 43.06% | 22 | 30.56% |
| | Reasons | 39 | 54.17% | 47 | 65.28% | 29 | 40.28% | 35 | 48.61% |
| Study 3b (critical slide) | Nothing wrong | 21 | 20.79% | 10 | 9.9% | 31 | 30.69% | 24 | 23.76% |
| | Dumbfounded | 12 | 11.88% | 19 | 18.81% | 16 | 15.84% | 16 | 15.84% |
| | Reasons | 68 | 67.33% | 72 | 71.29% | 54 | 53.47% | 61 | 60.4% |
| Study 3b (coded) | Nothing wrong | 21 | 20.79% | 10 | 9.9% | 31 | 30.69% | 24 | 23.76% |
| | Dumbfounded | 16 | 15.84% | 30 | 29.7% | 28 | 27.72% | 22 | 21.78% |
| | Reasons | 64 | 63.37% | 61 | 60.4% | 42 | 41.58% | 55 | 54.46% |

Figure 1 Rates of observed dumbfounding for each scenario across each study.

Table 3 Responses to the questionnaires presented between dilemmas

| Study | Question | Heinz | Cannibal | Incest | Trolley |
|---|---|---|---|---|---|
| Study 1 | Changed mind | 2.87 | 3.40 | 2.63 | 2.60 |
| | Confidence | 5.30 | 4.77 | 5.40 | 5.07 |
| | Confused | 3.00 | 3.67 | 3.33 | 3.70 |
| | Irritated | 3.00 | 3.33 | 3.13 | 3.37 |
| | ‘Gut’ | 5.23 | 5.20 | 4.97 | 5.07 |
| | ‘Reason’ | 4.83 | 4.40 | 4.43 | 4.77 |
| | Gut minus Reason | 0.40 | 0.80 | 0.53 | 0.30 |
| Study 2 | Confidence | 6.10 | 5.86 | 5.62 | 5.26 |
| | Confused | 2.40 | 3.08 | 4.14 | 3.17 |
| | Irritated | 4.58 | 4.68 | 4.32 | 4.28 |
| | ‘Gut’ | 5.29 | 5.54 | 5.82 | 4.96 |
| | ‘Reason’ | 4.89 | 5.19 | 4.89 | 4.93 |
| | Gut minus Reason | 0.40 | 0.35 | 0.93 | 0.03 |
| Study 3a | Changed mind | 2.38 | 1.67 | 2.00 | 2.00 |
| | Confidence | 5.22 | 5.50 | 5.38 | 4.81 |
| | Confused | 2.75 | 2.96 | 3.25 | 2.89 |
| | Irritated | 3.94 | 4.64 | 4.07 | 3.60 |
| | ‘Gut’ | 4.78 | 5.44 | 5.44 | 4.92 |
| | ‘Reason’ | 5.07 | 5.26 | 5.11 | 5.06 |
| | Gut minus Reason | -0.29 | 0.18 | 0.33 | -0.14 |
| Study 3b | Changed mind | 1.74 | 1.60 | 1.57 | 1.83 |
| | Confidence | 5.78 | 6.16 | 5.81 | 5.36 |
| | Confused | 2.06 | 2.07 | 2.12 | 2.22 |
| | Irritated | 4.42 | 4.01 | 3.56 | 3.39 |
| | ‘Gut’ | 4.42 | 4.43 | 4.47 | 4.01 |
| | ‘Reason’ | 5.46 | 5.69 | 5.26 | 5.58 |
| | Gut minus Reason | -1.04 | -1.27 | -0.79 | -1.57 |

In line with the original study (Haidt et al., 2000), the videos were also coded, by the primary researcher, across a range of measures. Haidt, Björklund, and Murphy (2000) report differences between intuition and reasoning scenarios. They do not, however, report comparisons between participants identified as dumbfounded and participants not identified as dumbfounded. The current research, aiming to identify measurable indicators of dumbfounding, categorised participants as dumbfounded according to the two types of verbal responses (admissions and unsupported declarations) and compared these groups with participants who were not identified as dumbfounded, across a range of measures. There were two stages in this analysis. Firstly, all participants identified as dumbfounded were compared against participants who provided reasons only. Secondly, participants identified as dumbfounded were grouped according to type of dumbfounded response, and participants who did not rate the behaviour as wrong were also included in the analysis.

Judgement variables reported by Haidt, Björklund, and Murphy (2000) included the length of time until the first argument, the length of time until the first evaluation, and the length of time between the first evaluation and the first argument. The current research reports the same judgement variables.

A range of “argument variables” were also reported. Identifying specific, objectively verifiable, measurable indicators for some of the “argument variables” reported by Haidt, Björklund, and Murphy (2000) was problematic (e.g., “dead-ends”, “argument kept”, “argument dropped”). The current research coded each verbal utterance according to its relevance for forming an argument. As such, some of the argument variables reported by Haidt, Björklund, and Murphy (2000) are not reported here in the same way; however, related measures are reported.

Paralinguistic variables reported by Haidt, Björklund, and Murphy (2000) include frequency (per minute) of: “ums, uhs, hmms”, “turns with laughter”, “turns with face touch”, “doubt faces”, and “turns with pen fiddle”. As with the argument variables, the coding of the non-verbal/paralinguistic responses also varies slightly from what was reported by Haidt, Björklund, and Murphy (2000). We coded for both verbal hesitations (“um/em/uh”) and non-verbal hesitations/stuttering. “Turns” was coded independently of other behaviours as changing position. Laughter was coded for independently of changing position. The coding of hands touching the self was not limited to the face. Participants did not have pens to fiddle with; however, we coded for generic fidgeting. The term “doubt faces” proved problematic to code for rigorously across different individuals. As such, two distinctive and opposing facial expressions were coded for: smiling and frowning.

Dumbfounded versus reasons

Fifty nine cases of participants providing reasons were compared with 32 cases of dumbfounded responding. There was no difference in time until first judgement between the dumbfounded group (M = 14.89, SD = 20.41) and the group who provided reasons (M = 15.19, SD = 40.54), p = .969. Similarly, there was no difference in time until first argument between the dumbfounded group (M = 39.20, SD = 28.90) and the group who provided reasons (M = 30.49, SD = 32.30), F(1, 81) = 1.42, p = .237, partial η² = .017. There was no difference in time from first judgement to time of first argument between the dumbfounded group (M = 20.60, SD = 36.76) and the group who provided reasons (M = 15.65, SD = 46.42), p = .634.

There was a significant difference in frequency (per minute) of utterances whereby participants were working towards a reason between the dumbfounded group (M = 1.47, SD = 1.45) and the group who provided reasons (M = 2.70, SD = 1.53), F(1, 89) = 13.82, p < .001, partial η² = .134. There was no difference in frequency (per minute) of irrelevant arguments between the dumbfounded group (M = 1.03, SD = .74) and the group who provided reasons (M = .86, SD = .77), F(1, 89) = 1.05, p = .308, partial η² = .012. There was a significant difference in frequency (per minute) of expressions of doubt between the dumbfounded group (M = .63, SD = .65) and the group who provided reasons (M = .31, SD = .58), F(1, 89) = 5.87, p = .017, partial η² = .062.

A one-way ANOVA revealed a significant difference in number of times per minute participants laughed between the dumbfounded group (M = 2.81, SD = 2.84) and the group who provided reasons (M = 1.18, SD = 1.25), F(1, 89) = 14.35, p < .001, partial η² = .139. Similarly, a one-way ANOVA revealed a significant difference in the relative amount of time spent smiling (as a proportion of the total time spent on the given scenario) between the dumbfounded group (M = .32, SD = .15) and the group who provided reasons (M = .16, SD = .14), F(1, 89) = 25.24, p < .001, partial η² = .221. Consistent with the results reported by Haidt, Björklund, and Murphy (2000), a series of one-way ANOVAs revealed no differences in verbal hesitations, F(1, 89) = 2.35, p = .129, partial η² = .026, non-verbal hesitations, p = .074, changing posture, p = .485, hands on the self, p = .864, frowning, p = .958, and fidgeting, F(1, 89) = 1.66, p = .201, partial η² = .018. A one-way ANOVA revealed a significant difference in the relative amount of time spent in silence (as a proportion of the total time spent on the given scenario) between the dumbfounded group (M = .14, SD = .08) and the group who provided reasons (M = .09, SD = .06), F(1, 89) = 9.72, p = .002, partial η² = .098.
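The effect sizes reported in this subsection can be cross-checked against the F statistics and their degrees of freedom, because for a one-way design partial η² = (F × df_effect) / (F × df_effect + df_error). The Python sketch below is illustrative only and is not the authors' analysis code (the analyses were run in R and SPSS); the function name `partial_eta_squared` is a hypothetical helper introduced here.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics as reported in the text: (measure, F, df_effect, df_error)
reported = [
    ("working towards a reason", 13.82, 1, 89),
    ("laughter", 14.35, 1, 89),
    ("smiling", 25.24, 1, 89),
    ("silence", 9.72, 1, 89),
]

for measure, f_value, df_effect, df_error in reported:
    effect = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{measure}: partial eta squared = {effect:.3f}")
```

Run as written, the four values round to .134, .139, .221, and .098, matching the effect sizes reported for these measures.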

From the above analysis, it appears that working towards reasons, expressions of doubt, laughter, smiling, and silence were the only measures that varied significantly depending on whether a person was identified as dumbfounded or provided reasons. Having identified differences between dumbfounded participants and participants providing reasons, the following analysis investigates whether there are differences depending on the type of dumbfounded response provided. Participants who did not rate the behaviour as wrong are also included in the following analysis.

Variation between different types of dumbfounded responses

Four groups, based on overall reaction to scenarios, were identified: participants who did not rate the behaviour as wrong, participants who provided reasons, participants who provided unsupported declarations, and participants who admitted to not having reasons.

A one-way ANOVA revealed a significant difference in relative frequency of utterances whereby participants were working towards a reason depending on overall reaction to scenarios, F(3, 120) = 7.54, p < .001, partial η² = .159. Tukey’s post-hoc pairwise comparison revealed that participants who provided reasons were identified as working towards a reason significantly more frequently (M = 2.70, SD = 1.53) than participants who did not rate the behaviour as wrong (M = 1.76, SD = 1.48), p = .021, and more frequently than participants who provided unsupported declarations as justifications (M = .64, SD = .72), p < .001. There was no difference between participants who admitted to not having reasons (M = 1.90, SD = 1.56) and any of the other groups. A one-way ANOVA revealed no significant difference in relative frequency of expressions of doubt depending on overall reaction to scenarios, F(3, 120) = 2.17, p = .096, partial η² = .051.

A one-way ANOVA revealed a significant difference in relative frequency of laughter depending on overall reaction to scenarios, F(3, 120) = 8.27, p < .001, partial η² = .171. Tukey’s post-hoc pairwise comparison revealed that participants who admitted to not having reasons laughed significantly more frequently (M = 2.41, SD = 2.00) than participants who provided reasons (M = 1.18, SD = 1.25), p = .039, and more frequently than participants who did not rate the behaviour as wrong (M = .97, SD = 1.29), p = .025. Similarly, participants who provided unsupported declarations laughed significantly more frequently (M = 3.57, SD = 4.00) than participants who provided reasons, p < .001, and more frequently than participants who did not rate the behaviour as wrong, p < .001. There was no difference between participants who provided reasons and participants who did not rate the behaviour as wrong, p = .951. Interestingly, there was no difference between participants who admitted to not having reasons and participants who provided unsupported declarations, p = .305.

A similar pattern of results was found for time spent smiling. A one-way ANOVA revealed a significant difference in relative time spent smiling depending on overall reaction to scenarios, F(3, 120) = 9.97, p < .001, partial η² = .200. Tukey’s post-hoc pairwise comparison revealed that participants who admitted to not having reasons spent significantly more time smiling (M = .33, SD = .14) than participants who provided reasons (M = .16, SD = .14), p < .001, and more time smiling than participants who did not rate the behaviour as wrong (M = .16, SD = .13), p < .001. Participants who provided unsupported declarations spent significantly more time smiling (M = .31, SD = .17) than participants who provided reasons, p = .008, and participants who did not rate the behaviour as wrong, p = .014. There was no difference between participants who provided reasons and participants who did not rate the behaviour as wrong, p = 1.000. Again, there was no difference between participants who admitted to not having reasons and participants who provided unsupported declarations, p = .996.

A one-way ANOVA revealed a significant difference in relative amount of time spent in silence depending on overall reaction to scenarios, F(3, 120) = 3.31, p = .023, partial η² = .076. The mean proportions of interview time spent in silence were as follows: participants providing reasons, M = .09, SD = .06; participants not rating the behaviour as wrong, M = .12, SD = .07; participants admitting to not having reasons, M = .14, SD = .09; and participants providing unsupported declarations, M = .14, SD = .05. Tukey’s post-hoc pairwise comparison did not reveal any significant differences between specific groups.

Further analyses

An exploratory analysis revealed no association between number of times dumbfounded and score on either measure from the MLQ: Presence, r(31) = 0.74, p = .466, or Search, r(31) = 1.38, p = .179, or the Centrality of Religiosity Scale, r(31) = 0.35, p = .726. There was no difference in observed rates of dumbfounded responses depending on the order of scenario presentation, χ²(6, N = 124) = 4.01, p = .676. Rates of dumbfounded responses varied depending on which moral dilemma was being discussed, χ²(6, N = 124) = 46.82, p < .001. The highest rate of dumbfounding was recorded for Incest, with 18 of the 31 (58.06%) participants displaying dumbfounded responses. Eleven participants (35.48%) displayed dumbfounded responses for Cannibal and three participants (9.68%) displayed dumbfounded responses for Trolley. The lowest recorded rate of dumbfounded response was for the Heinz dilemma, with no participants resorting to unsupported declarations as justification or admitting to not having reasons for their judgement. This trend is generally consistent with that which emerged in the original study (with the exception of Trolley, which was not used in the original study). Furthermore, rates of dumbfounded responding varied depending on which type of moral scenario was being discussed. Heinz and Trolley, identified as reasoning scenarios, were contrasted against the intuition scenarios Incest and Cannibal. There was significantly more dumbfounded responding for the intuition scenarios (29 instances) than for the reasoning scenarios (3 instances), χ²(2, N = 124) = 38.17, p < .001.
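The two chi-square statistics for scenario and scenario type can be recomputed from the Study 1 counts in Table 2. The sketch below is a plain-Python illustration of Pearson's chi-square test of independence, not the authors' R or SPSS code; the helper `chi_square` is hypothetical, and the column order (Heinz, Cannibal, Incest, Trolley) is an assumption inferred from the per-scenario counts given in the text.

```python
def chi_square(table):
    """Return the Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of response type and scenario
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Study 1 counts from Table 2; assumed column order: Heinz, Cannibal, Incest, Trolley
by_scenario = [
    [6, 8, 11, 8],    # nothing wrong
    [0, 11, 18, 3],   # dumbfounded
    [25, 12, 2, 20],  # reasons
]

# Collapse to scenario type: reasoning (Heinz + Trolley) vs intuition (Cannibal + Incest)
by_type = [[row[0] + row[3], row[1] + row[2]] for row in by_scenario]

stat_scenario, df_scenario = chi_square(by_scenario)
stat_type, df_type = chi_square(by_type)
print(f"by scenario: chi2({df_scenario}) = {stat_scenario:.2f}")
print(f"by type:     chi2({df_type}) = {stat_type:.2f}")
```

With these counts the sketch gives χ²(6) = 46.82 by scenario and χ²(2) = 38.17 by scenario type, in agreement with the values reported above. The p-values are not computed here because that would require a chi-square distribution function from a statistics library.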

The aim of Study 1 was to examine the replicability of moral dumbfounding as identified by Haidt, Björklund, and Murphy (2000), and identify specific measurable responses that may be indicative of dumbfounding. The overall pattern of responses, and pattern of inter-scenario variability in responding, resembled that observed in the original study. As such, Study 1 successfully replicated the findings of the original moral dumbfounding study (Haidt, Björklund, and Murphy 2000). Participants were identified as dumbfounded according to two specific measures: admissions of having no reasons, and unsupported declarations followed by a failure to provide reasons when questioned further. Both of these responses were accompanied by similar increases in incidences of laughter, and time spent smiling, when compared to participants providing reasons, and participants not rating the behaviour as wrong. When taken together, these responses were also accompanied by more silence during the interview, when compared with participants who provided reasons. As such, it appears that identifying incidences of dumbfounding according to unsupported declarations or admissions of not having reasons largely captures dumbfounding as described by Haidt, Björklund, and Murphy (2000).

Study 1 provides evidence supporting the view that moral dumbfounding is a genuine phenomenon and can be elicited in an interview setting when participants are pressed to justify their judgements of particular moral scenarios. Two key limitations have been identified as a result of conducting studies in an interview setting. Firstly, conducting video-recorded interviews, and the accompanying analyses, is particularly labour intensive, which leads to a smaller sample size. The aims of the present research were to examine the replicability of dumbfounding, and to identify specific measurable indicators of dumbfounding. A sample size of thirty-one is not sufficient to fulfil the first aim. Secondly, an interview setting introduces a social context that may influence the responses of participants, in that participants may feel a social pressure to behave in a particular way (e.g., Royzman, Kim, and Leeman 2015). Alternative methods are required to examine dumbfounding with a larger sample, and to establish whether it still occurs in the absence of the social pressure that is present in an interview setting. Two responses have been identified as indicators of dumbfounding. The degree to which each of these responses can be elicited in a setting other than an interview is investigated in Studies 2 and 3.

Having successfully elicited dumbfounded responses in a video recorded interview with a small sample, the aim of Study 2 was to devise methods that might elicit dumbfounding in a systematic way, using standardised materials and procedure that can be administered without the need for an interviewer. This will eliminate participant-interviewer interaction as a source of possible variability, remove the social pressure associated with an interview setting, and enable the study to be conducted with a larger sample. It was hypothesised that presenting participants with the same dilemmas and counter-arguments as in Study 1 as part of a computer task, as opposed to in an interview, would lead to a similar state of dumbfoundedness as found in Study 1. However, a major challenge to this alternative medium of conducting the study is identifying specific behavioural responses that are indicative of a state of dumbfoundedness that can be elicited and recorded. Without the benefit of an experimenter to guide the discussion, and a video recording that can be analysed, this challenge was addressed by developing a critical slide (described below). Scenarios and counter-arguments to commonly made judgements were presented on a sequence of slides before participants were asked to describe their judgement on a forced choice critical slide. Participants were identified as dumbfounded if they selected an unsupported declaration from a selection of three possible responses present on the critical slide, or if they provided an unsupported declaration as a reason.

Participants and design

Study 2 was a frequency-based, conceptual replication of Study 1. The aim was to identify if dumbfounded responding could be evoked via a computer-based task. All participants were presented with the same four moral vignettes. Results are primarily descriptive. Further analysis tested for differences in responding depending on the vignette, or type of vignette, presented.

A sample of 72 participants (52 female, 20 male; Mage = 21.18, min = 18, max = 50, SD = 5.18) took part in this study. Participants were undergraduate students and postgraduate students from MIC. Participation was voluntary and participants were not reimbursed for their participation.

Procedure and materials

This study used largely the same materials as in Study 1. The four vignettes from Study 1 (Heinz, Incest, Cannibal, and Trolley; Appendix A), along with the same prepared counter arguments (Appendix B), were used. Dumbfounding was measured using the critical slide. The critical slide contained a statement defending the behaviour and a question as to how the behaviour could be wrong (e.g., “Julie and Mark’s behaviour did not harm anyone, how can there be anything wrong with what they did?”). There were three possible answer options: ( a ) “There is nothing wrong”; ( b ) an unsupported declaration, naming the specific behaviour described in the scenario (e.g., “Incest is just wrong”); and ( c ) a judgement with accompanying justification, “It’s wrong and I can provide a valid reason”. The order of these response options was randomised. Participants who selected ( c ) were then prompted on a following slide to type a reason. The selecting of option ( b ), the unsupported declaration, was taken to be a dumbfounded response, as was the use of an unsupported declaration as a justification for option ( c ).
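The coding rule for the critical slide described above can be sketched as a small function. This is a hypothetical illustration rather than the OpenSesame script used in the study: the option labels, the function name `code_response`, and the boolean flag are assumptions. The flag stands in for the primary researcher's manual judgement of whether a typed reason was itself an unsupported declaration, and the example responses are made up.

```python
from collections import Counter

# Hypothetical labels for the three critical-slide options
NOTHING_WRONG = "a"
UNSUPPORTED_DECLARATION = "b"
CAN_PROVIDE_REASON = "c"


def code_response(option: str, typed_reason_is_unsupported: bool = False) -> str:
    """Map one critical-slide response to a Study 2 response category."""
    if option == NOTHING_WRONG:
        return "nothing wrong"
    if option == UNSUPPORTED_DECLARATION:
        return "dumbfounded"
    if option == CAN_PROVIDE_REASON:
        # A typed "reason" coded as an unsupported declaration also counts as dumbfounded
        return "dumbfounded" if typed_reason_is_unsupported else "reasons"
    raise ValueError(f"unknown option: {option!r}")


# Made-up responses for illustration: (selected option, coder's flag)
responses = [("b", False), ("c", False), ("c", True), ("a", False)]
tally = Counter(code_response(option, flag) for option, flag in responses)
print(tally)
```

Keeping the coder's judgement as an explicit input makes clear that only the selection of option ( b ) is recorded automatically; the status of a typed reason still depends on manual coding.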

This study made use of the same post-discussion questionnaire as in Study 1 (Appendix C). This was administered after the critical slide for each scenario. There was a change to one of the questions on this post-discussion questionnaire: the question asking if participants had changed their judgements was changed from “how much did your judgement change?” with a seven point Likert scale response to “did your judgement change?” with a binary “yes/no” response option. Both MLQ (Steger et al. 2008) and CRSi7 taken from The Centrality of Religiosity Scale (Huber and Huber 2012) were also used.

OpenSesame was used to present the vignettes and collect responses (Mathôt, Schreij, and Theeuwes 2012). The same four moral dilemmas (Appendix A) as in Study 1 were presented to participants (in randomised order). Following the presentation of each dilemma, participants were asked to judge, on a seven point Likert scale how right or wrong they would rate the behaviour of the characters in the given scenario. After making a judgement participants were then presented with a series of counter-arguments. Following these counter-arguments, participants were presented with the critical slide. Following the critical slide participants completed the same brief questionnaire as in Study 1 (between scenarios) in which they were asked to rate, on a seven point Likert scale, how right/wrong they thought the behaviour was; how confused they were; how irritated they were; how much their judgement had changed; how much their judgement was based on reason; and how much their judgement was based on “gut” feeling. When participants had completed all questions relating to all four dilemmas they completed the same longer questionnaire as in Study 1 containing the MLQ (Steger et al. 2008), the Centrality of Religiosity Scale (Huber and Huber 2012), and some questions relating to demographics. The entire study lasted approximately fifteen to twenty minutes.

Results and Discussion

Participants who selected the unsupported declaration on the critical slide were identified as dumbfounded. Table 1 shows the ratings of the behaviours across each scenario. Table 2 shows the number, and percentage, of participants who displayed “dumbfounded” responses (identified as the selecting of an unsupported declaration) and non-dumbfounded responses for each dilemma. Figure 1 shows the percentage of participants displaying dumbfounded responses for each dilemma. Table 3 shows the responses to the questionnaires presented between dilemmas. The open-ended responses provided by participants who selected option ( c ) “It’s wrong and I can provide a valid reason” were analysed and coded by the primary researcher, and unsupported declarations provided here were also identified as dumbfounded responses. Following this coding, one additional participant was identified as dumbfounded for Trolley.

Sixty eight of the 72 participants (94%) selected the unsupported declaration at least once. There was no statistically significant difference in responses to the critical slide depending on the order of scenario presentation, χ²(6, N = 288) = 4.13, p = .659. There was no statistically significant difference in responses to the critical slide depending on scenario presented, χ²(6, N = 288) = 9.00, p = .173. Rates of dumbfounded responding did not vary with type of moral scenario (100 instances for intuition scenarios, 90 instances for reasoning scenarios) being discussed, χ²(2, N = 288) = 6.58, p = .037. Forty five participants (62.5%) selected the unsupported declaration for Heinz. Forty six participants (63.89%) selected (or provided) the unsupported declaration for Cannibal and Trolley. Fifty four participants (75%) selected the unsupported declaration for Incest. There was no association between number of times dumbfounded and score on either measure of the Meaning in Life Questionnaire, Presence, r(72) = -0.44, p = .662, or Search, r(72) = 1.12, p = .268, or the Centrality of Religiosity Scale, r(72) = 1.24, p = .220.

The most striking result from this study was the willingness of participants to select the unsupported declaration in response to a challenge to their judgement. This is inconsistent with what was found in both Study 1 and the original study by Haidt, Björklund, and Murphy (2000). In these studies, participants did not readily offer an unsupported declaration as justification for their judgement; rather, it was a last resort following extensive cross-examining. The exceptionally high rates of dumbfounding observed in Study 2 do not appear to be representative of the phenomenon more generally. There is, therefore, clearly a difference between offering an unsupported declaration as a justification for a judgement during an interview and selecting an unsupported declaration from a list of possible response options during a computerised task. It is possible that, during the interview, participants experienced a social pressure to successfully justify their judgement. This social pressure may also have made participants more aware of the illegitimacy of using an unsupported declaration as a justification for their judgement. It is also possible that seeing the unsupported declaration written down as a possible answer legitimises selecting it as a justification for the judgement. The unsupported declaration does not provide an acceptable answer to the question on the critical slide; however, its presence in the list of possible response options may imply to participants that it is an acceptable answer, particularly if they do not put too much thought into it. By selecting the unsupported declaration participants can move quickly along to the next stage in the study without necessarily acknowledging any inconsistency in their reasoning, avoiding potentially dissonant cognitions (e.g., Case et al. 2005; Harmon-Jones and Harmon-Jones 2007; see also Heine, Proulx, and Vohs 2006). Selecting the unsupported declaration may also allow the participant to proceed without expending effort trying to think of reasons for their judgement beyond the intuitive justifications that had already been de-bunked.

Rates of dumbfounded responding in Study 2 were higher than expected. Possible reasons for this could be ( a ) reduced social pressure to appear to have reasons for judgements; ( b ) a failure of participants to comprehend that the unsupported declaration does not provide a logically justifiable response to the question asked in the critical slide; ( c ) the apparent legitimising of the unsupported declaration by its inclusion in the list of possible response options; or (d) the selecting by participants of an “easy way out” option without thinking about it fully (through carelessness/laziness/eagerness to move on to a less taxing task). It appears that the selecting of unsupported declarations is not an accurate measure of dumbfounding. In Study 1, participants were only identified as dumbfounded based on the providing of an unsupported declaration if they subsequently failed to provide further reasons when the unsupported declaration was questioned. However, in some cases, participants who provided unsupported declarations were not identified as dumbfounded, based on subsequent responses. A follow up analysis of the interview data revealed that 23 participants provided an unsupported declaration and proceeded to provide reasons for at least one of their judgements; a further six participants provided an unsupported declaration and proceeded to revise their judgement at least once. A stricter measure of dumbfounding, one by which participants are required to explicitly acknowledge a state of dumbfoundedness is necessary to address the issues with the selecting of an unsupported declaration that may have led to the unusually high rates of dumbfounding observed in Study 2.

Study 3a: Revised Computerised Task – College sample

Study 3a was designed in response to the unexpectedly high rates of observed dumbfounding in Study 2. Four limitations of the use of the unsupported declaration selection as a measure of dumbfounding were identified. It was hypothesised that replacing the unsupported declaration with an explicit admission of not having reasons would address each of these limitations and bring the option selection more in line with conversational logic, making participants less willing to casually select the dumbfounded response. Making participants explicitly acknowledge the absence of reasons for their judgement means that their selecting of a dumbfounded response cannot be attributed to a mere misunderstanding, and thus might provide a truer measure of dumbfounding.

Method

Participants and design

Study 3a was a frequency-based, modified replication. The aim was to identify whether dumbfounded responding could be evoked. All participants were presented with the same four moral vignettes. Results are primarily descriptive. Further analysis tested for differences in responding depending on the vignette, or type of vignette, presented.

A sample of 72 participants (46 female, 26 male; Mage = 21.80, min = 18, max = 46, SD = 3.91) took part in this study. Participants were undergraduate students and postgraduate students from MIC. Participation was voluntary and participants were not reimbursed for their participation.

Procedure and materials

The materials in this study were almost the same as in Study 2, with a change to the “dumbfounded” response option on the critical slide. Extra questions were included following each of the counter-arguments. On the critical slide, the unsupported declaration option was replaced with an admission of not having reasons (“It’s wrong but I can’t think of a reason”). Following each counter-argument, participants were asked if they (still) thought the behaviour was wrong, and if they had a reason for their judgement. The question on the post-discussion questionnaire asking if participants had changed their judgements was also revised: “did your judgement change?” with a binary “yes/no” response option reverted to “how much did your judgement change?” with a seven-point Likert scale response (as in Study 1). The same four dilemmas (Heinz, Incest, Cannibal, and Trolley; Appendix A), along with the same prepared counter-arguments (Appendix B) as in Study 2, were used in Study 3a. Both the MLQ (Steger et al. 2008) and the CRSi7 (Huber and Huber 2012) were also used. This study was conducted in a designated psychology computer lab in MIC and was administered entirely on individual computers using OpenSesame (Mathôt, Schreij, and Theeuwes 2012).

Participants were seated, given instructions, and allowed to begin the computer task. The four vignettes from Study 1 (Heinz, Incest, Cannibal, and Trolley; Appendix A), along with the same pre-prepared counter-arguments (Appendix B), were used. Dumbfounding was measured using the critical slide. The updated critical slide contained a statement defending the behaviour and a question as to how the behaviour could be wrong (e.g., “Julie and Mark’s behaviour did not harm anyone, how can there be anything wrong with what they did?”) with three possible response options: (a) “There is nothing wrong”; (b) “It’s wrong, but I can’t think of a reason”; (c) “It’s wrong and I can provide a valid reason”. The order of these response options was randomised. Participants who selected (c) were required to provide a reason. The selecting of option (b), the admission of not having reasons, was taken to be a dumbfounded response. When participants had completed all questions relating to all four dilemmas, they completed the same longer questionnaire as in Studies 1 and 2, containing the MLQ (Steger et al. 2008), the Centrality of Religiosity Scale (Huber and Huber 2012), and some questions relating to demographics. The entire study lasted approximately fifteen to twenty minutes.
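The response logic of the critical slide described above can be sketched as follows. This is a minimal illustration in Python, not the OpenSesame script used in the study; the names `randomised_options` and `classify_response`, and the category labels they return, are hypothetical.

```python
import random

# Response options on the revised critical slide (wording taken from the text).
OPTIONS = {
    "a": "There is nothing wrong",
    "b": "It's wrong, but I can't think of a reason",
    "c": "It's wrong and I can provide a valid reason",
}


def randomised_options(rng=random):
    """Return the option keys in a random display order."""
    keys = list(OPTIONS)
    rng.shuffle(keys)
    return keys


def classify_response(choice, reason=None):
    """Map a critical-slide choice to a response category (hypothetical labels)."""
    if choice == "a":
        return "nothing_wrong"
    if choice == "b":
        # Admission of not having reasons: taken to be a dumbfounded response.
        return "dumbfounded"
    if choice == "c":
        # Participants who selected (c) were required to provide a reason.
        if not reason or not reason.strip():
            raise ValueError("option (c) requires a typed reason")
        return "reason_provided"
    raise ValueError(f"unknown option: {choice!r}")
```

Keeping the display order separate from the option keys means that randomising the order cannot change how a selection is classified.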

Results and Discussion

Participants who selected the admission of not having reasons on the critical slide (option b) were identified as dumbfounded. Forty of the 72 participants (56%) selected the admission of not having reasons at least once. Table 1 shows the ratings of the behaviours across each scenario. Table 2 and Figure 1 show the percentage of participants displaying dumbfounded responses for each dilemma. Table 3 shows the responses to the questionnaires presented between dilemmas. Again, there was no statistically significant difference in responses to the critical slide depending on the order of scenario presentation, χ2(6, N = 288) = 0.61, p = .996. There was no difference in responses to the critical slide depending on scenario, χ2(6, N = 288) = 9.6, p = .142, or type of scenario (32 instances for intuition scenarios, 27 instances for reasoning scenarios), χ2(2, N = 288) = 4.53, p = .104. Thirteen participants (18.06%) selected the admission of not having reasons for Heinz. Fourteen participants (19.44%) selected the admission of not having reasons for each of Cannibal and Trolley. Eighteen participants (25%) selected the admission of not having reasons for Incest.
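The per-scenario percentages and the per-type totals reported above follow directly from the counts. The short Python check below recomputes them. The grouping of Incest and Cannibal as intuition scenarios and Heinz and Trolley as reasoning scenarios is an assumption, inferred because it is the grouping that matches the reported totals.

```python
N_PARTICIPANTS = 72

# Participants selecting the admission of not having reasons, per scenario
# (counts taken from the text).
admissions = {"Heinz": 13, "Cannibal": 14, "Trolley": 14, "Incest": 18}

# Percentage of the 72 participants, rounded to two decimals as in the text.
percentages = {
    scenario: round(100 * count / N_PARTICIPANTS, 2)
    for scenario, count in admissions.items()
}

# Assumed grouping of scenarios by type (inferred from the reported totals).
intuition_total = admissions["Incest"] + admissions["Cannibal"]
reasoning_total = admissions["Heinz"] + admissions["Trolley"]

for scenario, pct in percentages.items():
    print(f"{scenario}: {admissions[scenario]}/{N_PARTICIPANTS} = {pct}%")
print(f"intuition: {intuition_total}, reasoning: {reasoning_total}")
```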

The replacing of an unsupported declaration with an admission of having no reasons led to substantially lower rates of dumbfounding than observed in Study 2. As such, it appears that the issues associated with the selecting of an unsupported declaration have been addressed in Study 3a. However, the rates of dumbfounding observed for Incest and Cannibal in Study 3a were considerably lower than those observed in Study 1. This suggests the revised measure may be too strict, measuring only open admissions of not having reasons, but not accounting for a failure to provide reasons. As in the first computerised task, participants who selected “It’s wrong and I can provide a valid reason” were then required to provide a reason. In order to provide a measure of a failure to provide reasons, these responses were analysed and coded by the primary researcher. Those containing unsupported declarations were taken as evidence of a failure to provide a reason and identified as dumbfounded responses.

During the coding, another class of dumbfounded response was identified. Participants occasionally provided undefended tautological responses as justification for their judgements, whereby they simply named or described the behaviour in the scenario as justification for their judgement (e.g., “They are related”, “Because it is canibalism” [typographical error in response]). These responses may be viewed as largely equivalent to unsupported declarations (e.g., Mallon and Nichols 2011). In Study 1, they were not identified as dumbfounded responses because, when provided in an interview setting, they were always followed by further questioning. This further questioning could lead to two possible responses: (a) a dumbfounded response (unsupported declaration or an admission of not having reasons) or (b) an alternative reason. A computerised task does not allow for a follow-up probe to encourage participants to elaborate on such responses. Participants were not placed under time pressure and could articulate and review their typed reason at their own pace. It is reasonable to expect, then, that if participants did have a valid reason for their judgement, they would have provided it along with, or instead of, the undefended tautological response. As such, an undefended tautological reason appears to be evidence of a failure to identify reasons. For this reason, these undefended tautological reasons were also coded as dumbfounded responses, along with the unsupported declarations.
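The combined rule for identifying a dumbfounded response can be sketched as below. This is a hypothetical illustration only: the coding of open-ended reasons was carried out by a human coder, so the function takes the coder's label as input and does not attempt to classify text. The label names are assumptions introduced for the example.

```python
# Hypothetical labels a human coder might assign to a typed reason.
DUMBFOUNDED_CODES = {"unsupported_declaration", "undefended_tautology"}


def is_dumbfounded(choice, coded_reason=None):
    """Return True if a critical-slide response counts as dumbfounded.

    choice: "a", "b", or "c" (the option selected on the critical slide).
    coded_reason: the coder's label for the typed reason given with
        option (c); None when no reason was typed.
    """
    if choice == "b":
        # Explicit admission of not having reasons.
        return True
    # Option (c) counts only when the typed reason was coded as an
    # unsupported declaration or an undefended tautological response.
    return choice == "c" and coded_reason in DUMBFOUNDED_CODES
```

For example, `is_dumbfounded("c", "undefended_tautology")` returns `True`, whereas a typed reason given any other label is not counted.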

Table 2 and Figure 2 show the number and percentage of dumbfounded responses when the coded string responses are included in the analysis. With these responses included, the number of participants displaying a dumbfounded response at least once increased from 40 (56%) to 57 (79%). Observed rates of dumbfounding increased for each scenario when the coded open-ended responses were included, with 19 participants (26.39%) appearing to be dumbfounded by Heinz, 21 (29.17%) by Cannibal, 31 (43.06%) by Incest, and 22 (30.56%) by Trolley. Still, rates of dumbfounded responding did not vary with the type of moral scenario being discussed (52 instances for intuition scenarios, 41 instances for reasoning scenarios), χ2(1, N = 288) = 1.59, p = .208. There was no association between number of times dumbfounded and score on either measure on the Meaning in Life Questionnaire; Presence r(72) = 0.82, p = .413, or Search, r(72) = 0.07, p = .945, or the Centrality of Religiosity Scale r(72) = 1.29, p = .201.
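The test of scenario type can be recomputed from the reported counts. The sketch below assumes a 2 × 2 table of scenario type by dumbfounded or not dumbfounded, with 144 responses per type (72 participants × 2 scenarios), and applies a Yates continuity correction. The text does not state that a correction was used; it is assumed here because the corrected statistic is the one that matches the reported value. The helper `chi_square_2x2_yates` is hypothetical and uses only the standard library.

```python
import math


def chi_square_2x2_yates(a, b, c, d):
    """Yates-corrected chi-square for the 2x2 table [[a, b], [c, d]].

    Returns (chi2, p) with one degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    # Continuity-corrected statistic:
    # N * (|ad - bc| - N/2)^2 / (product of the four marginal totals).
    diff = max(abs(a * d - b * c) - n / 2, 0.0)
    chi2 = n * diff ** 2 / (row1 * row2 * col1 * col2)
    # For df = 1, the chi-square upper tail equals erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# 72 participants x 2 scenarios of each type = 144 responses per type.
per_type = 72 * 2
chi2, p = chi_square_2x2_yates(52, per_type - 52, 41, per_type - 41)
print(f"chi2(1, N = 288) = {chi2:.2f}, p = {p:.3f}")
```

Note that this treats the 288 responses as independent observations, as the reported N implies, even though each participant contributed four responses.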

Rates of observed dumbfounding for each scenario across each study, including coded string responses.

When the coded open-ended responses were included in the analysis, the proportion of participants displaying a dumbfounded response at least once in Study 3a (79%) was much closer to that observed in the interview in Study 1 (74%) than before the open-ended responses were included (56%). The variation in observed rates of dumbfounding between dilemmas that was observed in the interview was not present in the computerised task. As such, there remains a difference between the dumbfounding elicited during an interview and that elicited as part of a computerised task. However, it is clear that dumbfounded responses can be elicited as part of a computerised task. The participants in Studies 1, 2, and 3a were all college students (largely from the same institution); as such, the following study investigated the phenomenon in a more diverse sample.

Study 3b: Revised Computerised Task – MTurk

Having successfully elicited dumbfounded responses in a college sample using a computerised task in Study 3a, we conducted Study 3b in an attempt to replicate Study 3a with a more diverse sample, recruited online through MTurk (Amazon Web Services Inc. 2016).

Method

Participants and design

Study 3b was a frequency-based, modified replication. The aim was to identify whether dumbfounded responding could be evoked. All participants were presented with the same four moral vignettes. Results are primarily descriptive. Further analysis tested for differences in responding depending on the vignette, or type of vignette, presented.

A sample of 101 participants (53 female, 47 male; Mage = 36.58, min = 18, max = 69, SD = 12.45) took part in this study. Participants were recruited online through MTurk (Amazon Web Services Inc. 2016). Participation was voluntary and participants were paid 0.70 US dollars for their participation. Participants were recruited from English-speaking countries or from countries where residents generally have a high level of English (e.g., The Netherlands, Denmark, Sweden). Location data for individual participants were not recorded; however, based on other studies using the same selection criteria, it is likely that 90% of the sample was from the United States.

Procedure and materials

The materials in this study were almost the same as in Study 3a; however, a different software package was used to present the materials and collect the responses. OpenSesame (Mathôt, Schreij, and Theeuwes 2012) was replaced with Questback (Unipark 2013) in order to facilitate online data collection. This meant that the recording of responses changed from keyboard input to mouse input. It also allowed for multiple questions to be displayed on the screen at the same time. Other than these changes, the materials were the same as in Study 3a.

The computer task in Study 3b was much the same as in Study 3a. The four vignettes from Study 1 (Heinz, Incest, Cannibal, and Trolley; Appendix A), along with the same pre-prepared counter-arguments (Appendix B), were used. Dumbfounding was measured using the critical slide.

The critical slide contained a statement defending the behaviour and a question as to how the behaviour could be wrong, with three possible response options: (a) “There is nothing wrong”; (b) “It’s wrong but I can’t think of a reason”; (c) “It’s wrong and I can provide a valid reason”. Participants who selected (c) were required to provide a reason. The order of these response options was randomised. When participants had completed all questions relating to all four dilemmas, they completed the same longer questionnaire as in Studies 1 and 2, containing the Meaning in Life Questionnaire (Steger et al. 2008), the Centrality of Religiosity Scale (Huber and Huber 2012), and some questions relating to demographics. The entire study lasted approximately fifteen to twenty minutes.

Results and Discussion